Consulting, Dynamics AX Performance

How much database size is too much for good Dynamics AX Performance?

Database size is our ultimate boxing match opponent for Dynamics AX Performance. Time and Time again, I’ve knocked it down, but it never stays down for the count. And when it gets back up, it can deliver a crushing blow that changes an implementation from fast to slow seemingly overnight. Without data archiving, it always comes back. However, data archiving (not the built-in data management jobs) require an upfront investment that isn’t small.

Previously, we had discussed some of the issues with Dynamics AX Performance tuning but I left the discussion of size for this post:

The Managers Performance Tuning Survival Guide for Day 1 Slow Dynamics AX Performance

The Real Challenge with Size in regards to Dynamics AX Performance

If you are new to why size changes so much then I’d like to explain it. See, all data retrieval algorithms share a very important assumption, which is the amount of data that must be retrieved. The algorithms become incorrect when the size is not within the expected range. In Dynamics AX, you not only must worry about the database but also the code which performs according to the amount of data that it is handling. This can drive somebody crazy if they get the database performing well but ignore the code.

But size is always changing and going up in the long-term

That’s why I say that size is like the ultimate opponent. Just when you get AX all tuned and running well, it will hit a certain size, and things will go slow again. This is the central paradox of performance. You are always adjusting the inputs into data retrieval algorithms through things like indexes, partitions, compression, statistics, etc. Thus, we get to the real question with size and how to manage it.

The real challenge with the size is at what point do all the standard mechanisms for database retrieval become too expensive

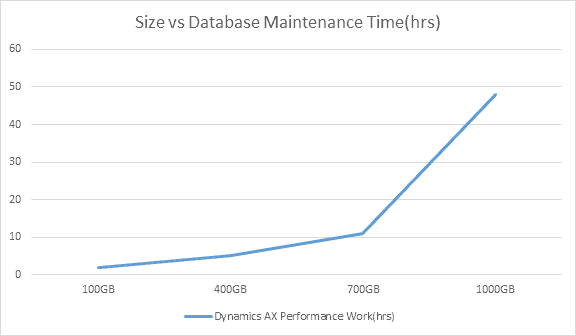

There is a tradeoff curve. Notice how the amount of time spent on performance tuning to maintain operations really goes up as the database gets larger. It starts out innocently enough at 2 hours a week but then hits over 40 hours a week once the database reaches a terabyte in size.

This is pretty close to how the size vs performance breakdown works, but the correlation is much stronger in regards to Dynamics AX Performance for the size of specific tables from my experience rather than the size of the database.

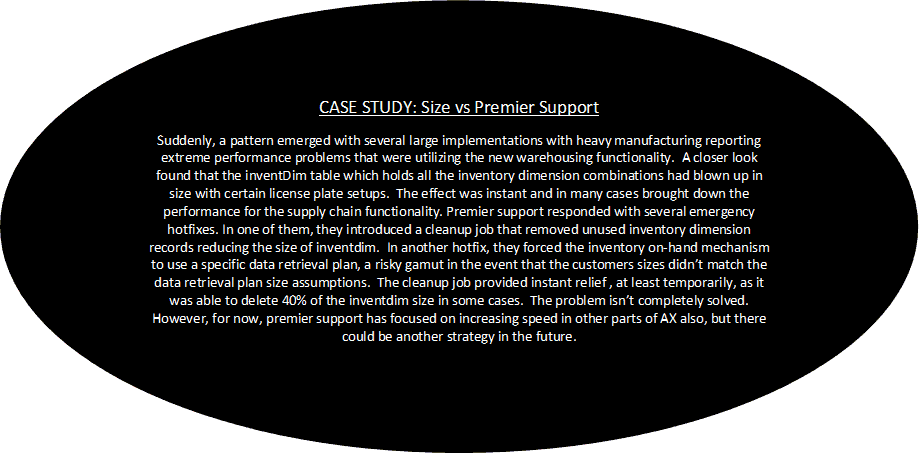

Case Study: Premier Support VS the new Warehouse Functionality

The case of the slow supply chain performance was caused by many implementations using heavy numbers of license plates when the new functionality came out. This greatly increased the size of the Inventdim table and broke down queries across the board. Notice how premier support temporarily solved the issue with a database cleanup job and a hardcoded data retrieval plan. This approach brought time. Meanwhile, many implementations increased their performance maintenance time work to compensate for the extra hit to performance as Inventdim records eventually grew back to their previous sizes.

Which was the right approach?

So, what was the right approach here? Was it data archiving or was it increased performance maintenance? It depends. The reason for this is because data archiving is an investment.

True Data Archiving when done right should take around 3 to 6 months for an Enterprise system with testing

There are a few concerns with data archiving. You have to test the heck out of the Intelligent Data Management Framework to ensure that the archiving doesn’t compromise your database. Any time you remove data, this is a concern. You don’t know if data integrity will remain amongst all customizations. Furthermore, data archiving is largely a custom code exercise for many tables. And finally, you have to ensure that the users have access to the archived data in an acceptable way. All of this equals concerns. In most cases, count on about three to six months to finish a data archiving project as an investment in that the cost of data archiving work will save on future performance tuning work.

Cost vs Benefits Analysis: are there so many performance problems that you need to reduce the size of the database or is the performance tuning maintainable?

That’s the question and it’s really one that every implementation has to answer on their own. I typically get alarmed when performance tuning is taking over 33% of the employee time.

Where to start:

Immediately Start Tracking the Size of the Database and the Tables

What you really need is a baseline. You should be capturing your table’s sizes weekly in SQL and storing them somewhere. Then you need to approximate how much of your time is spent on performance issues. You’ll start to notice that certain tables have growth rates directly correlated with where you are spending most of your time performance tuning. That will help you make a decision on where you need to performance tune.

In Summary, hopefully, this post cleared up the decisions involved on whether to undergo an archiving project or not. Archiving will stabilize work, but like anything else, it does involve an investment, and not every table can have its data easily archived. Still, it’s a key critical decision that nearly every implementation will face if they grow big enough.

9823

9823